When discussing the potential problems and limitations of artificial intelligence models, one of the topics typically considered (and with good reason) is that of bias. In a nutshell, one would expect that the decisions made by an AI system do not depend on some externalities of the input. So, when dealing with human beings, decisions should not depend on gender, race, religion, etc. This has led to a kind of demonisation of the term “bias,” and to extensive work trying to remove it from existing models (and from the data that is used to create them).

In reality, not all bias is equal (in fact, all data is skewed in one way or another), and current efforts to remove it conflate two different situations, which I call “model bias” (which we want to avoid) and “domain bias” (which is informative, and perhaps important to keep).

Consider one of the poster examples of bias in AI decision-making: deciding whether or not to grant a loan to a person. The narrative of the example typically works like this: two people, one male and one female, with the same job, the same kind of education, and the same salary ask for the same loan. The model grants it to the male, but rejects it for the female. The model is biased! We should fix it!

While it is certainly true that the model is biased, what I claim here is that this is not an issue of the model, but rather of the world it is used in (hence, “domain bias”). Before I get in trouble for this statement, let me explain. A system used to support the loan-assignment decision process is not trying to classify individuals as they are now. Rather, it is trying to predict how they will be in the future: will they be able to pay back the money over the next 20 years? Unfortunately, we live in a society where females have fewer opportunities in their career development, which translates into a lower likelihood of being able to pay in the long term. The negative view of the female client is caused by the domain (in this case, society) and not by the model itself. A good, accurate prediction model should be able to consider this fact, and people developing the model should not try to remove this bias from it. That would just make it a less reliable model.

Now, that does not mean that we should just accept the bias and walk on as if nothing happened. Previously I said that domain bias could be informative and useful, and I meant it. In the case of this example, it is this domain bias that lets us know (if we had not already seen it before) that there is something wrong with society, and that change is needed. But once again, it is through a social change, not a model change, that we should attempt to eliminate this bias.

Let me tell you of a lesser-known example of model bias, which should not exist: the Hot Lotto fraud scandal. Now, this is not what you would usually call AI, but it showcases the issue. Very briefly, in Lotto, a certain quantity of randomly generated numbers is drawn, and whoever guesses all of them wins. In this fraud, the random number generator (which in our terms is the model) had a bias that, on some specific days of the year, made some numbers more likely than the others. So, if you knew the bias, you could greatly increase your chances of winning.
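To make the idea of a biased generator concrete, here is a toy sketch. It is not the code involved in the actual fraud: the ten-number game, the trigger date, the favoured number 7, and the names `draw` and `frequencies` are all assumptions chosen purely for illustration. It shows how a generator that looks uniform on ordinary days can be heavily skewed on a chosen date, and how counting outcomes exposes the skew.

```python
import random
from collections import Counter
from datetime import date

NUMBERS = list(range(1, 11))   # toy game with numbers 1..10 (assumption)
RIGGED_DAYS = {(11, 23)}       # hypothetical trigger dates as (month, day)
FAVOURED = 7                   # hypothetical number favoured on rigged days


def draw(day: date, rng: random.Random) -> int:
    """Draw one number; on a rigged day the favoured number gets 10x weight."""
    if (day.month, day.day) in RIGGED_DAYS:
        weights = [10 if n == FAVOURED else 1 for n in NUMBERS]
        return rng.choices(NUMBERS, weights=weights, k=1)[0]
    return rng.choice(NUMBERS)


def frequencies(day: date, trials: int = 20_000, seed: int = 0) -> dict[int, float]:
    """Empirical frequency of each number over many draws on the given day."""
    rng = random.Random(seed)
    counts = Counter(draw(day, rng) for _ in range(trials))
    return {n: counts[n] / trials for n in NUMBERS}


fair = frequencies(date(2024, 6, 1))      # an ordinary day
rigged = frequencies(date(2024, 11, 23))  # a trigger day

print("largest frequency on an ordinary day:", max(fair.values()))
print("frequency of the favoured number on a rigged day:", rigged[FAVOURED])
```

On an ordinary day every number should come up about one time in ten, while on the trigger day the favoured number dominates. The skew comes from the generator itself, not from the world it describes, which is what makes it model bias.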

Other serious cases of model bias (more related to modern AI) which should never exist are differences in the performance of gesture recognition models depending on skin tone, or in the classification of the quality of a discourse depending on the speaker's accent.

Differentiating domain bias from model bias is fundamental if we want to achieve something even remotely resembling “commonsense reasoning” for an agent navigating our world. And domain bias should also be differentiated from discrimination. If I am reading a story where the two main protagonists are a Mexican and a German, it is reasonable to picture, in my mind’s eye, that the Mexican has darker skin and is shorter than the German. This is not discrimination. It just happens that in the world we live in, Mexicans are typically shorter and have darker skin than Germans. Nothing wrong with that. Are there exceptions? Sure! But lacking other evidence, the assumption is pretty reasonable.

Similarly, if one speaks about a Pope, it is perfectly correct to assume that he is male, merely because the rules of the Catholic religion do not allow females to become popes. Dually, a pregnant human is necessarily female, given the laws of biology. These are not prejudices; they are domain bias.

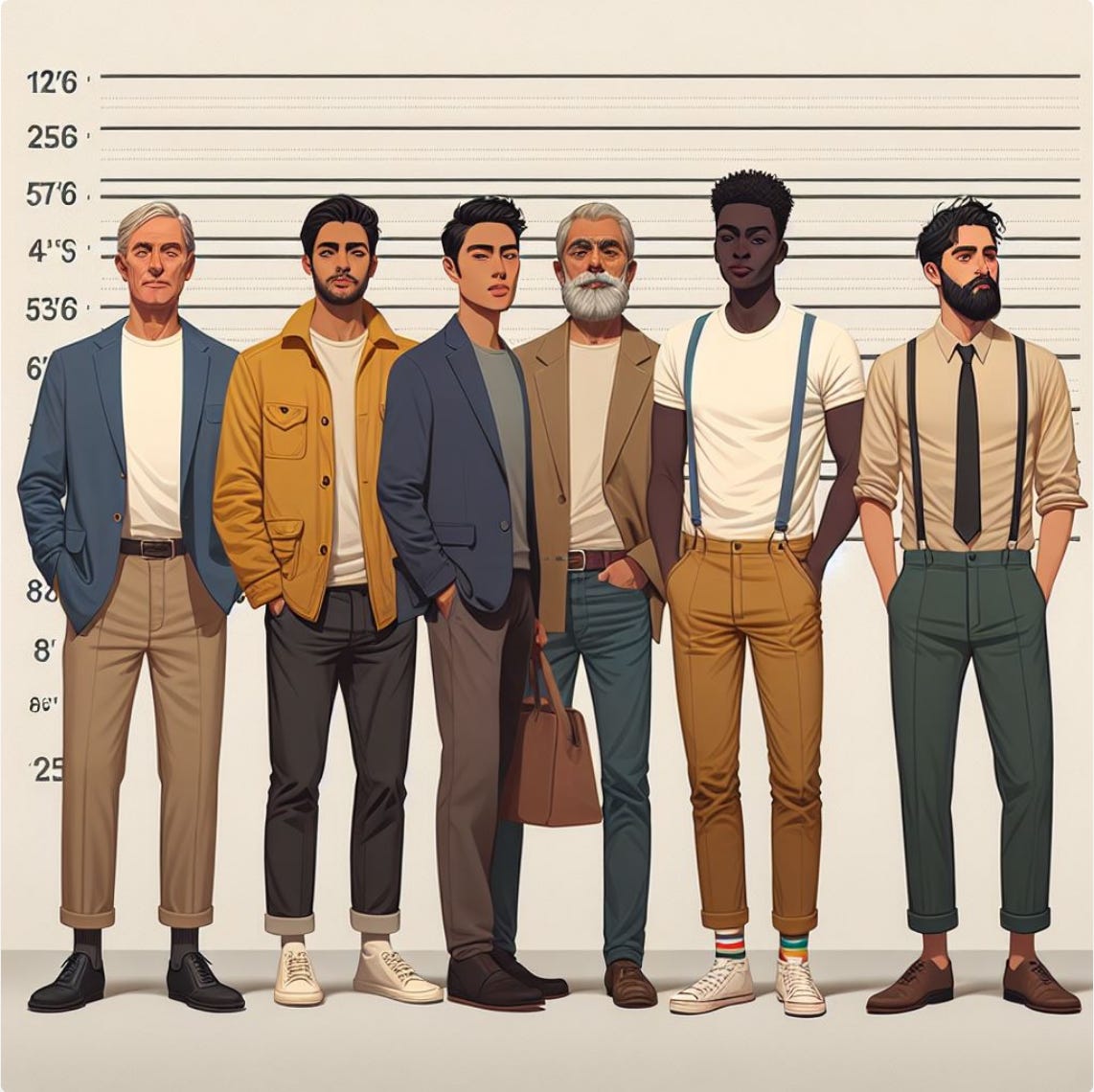

Another thing that many people complaining about bias in models ignore is statistics. For five years I taught a course on “foundations of computer science.” In that time, I had close to 1000 students, of whom fewer than 200 were female. If you ask me to imagine one average student from the course, I will imagine a male. This is reasonable for the pool of individuals. Many people complaining about bias do exactly this: they ask a model to provide one object, and complain that this one is not representative of the whole population. But finding actual bias requires a deeper analysis. If you ask me instead to describe a room with, say, 10 of my students, then there will be females (just not many of them). See the picture at the beginning of this post. I asked Dall-E to depict a height table of men from different ethnicities. Disregarding the weird numbers on the left, you will notice that they are all the same height. This is, without doubt, a representation of equality, but not a representation of reality. While many would interpret it as having removed bias, I interpret it as introducing error.
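The difference between asking for one student and asking for a room of ten can be checked with a little arithmetic. The sketch below assumes round figures of exactly 1000 students and 200 females (the text only says “close to 1000” and “fewer than 200”, so these are upper-bound assumptions), and it assumes the room is a random sample without replacement, which makes the count of females hypergeometric.

```python
from math import comb

TOTAL = 1000   # assumed round figure ("close to 1000" in the text)
FEMALE = 200   # assumed upper bound ("fewer than 200" in the text)
ROOM = 10      # size of the imagined room

# Asking for ONE student: the single most likely answer is the majority group.
p_female_single = FEMALE / TOTAL

# Asking for a ROOM of students, sampled without replacement:
# probability that none of them is female (hypergeometric distribution).
p_no_female = comb(TOTAL - FEMALE, ROOM) / comb(TOTAL, ROOM)
p_at_least_one_female = 1 - p_no_female

# Expected number of females in the room.
expected_females = ROOM * FEMALE / TOTAL

print(f"P(a single sampled student is female) = {p_female_single:.2f}")
print(f"P(at least one female among {ROOM})     = {p_at_least_one_female:.3f}")
print(f"Expected females among {ROOM}           = {expected_females:.1f}")
```

Under these assumed figures, a single “typical” student is male four times out of five, yet a room of ten contains at least one female with probability of about 0.89, and two females on average. Judging a model by one sample therefore says very little about whether its overall distribution is skewed.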

What I am trying to say here is that not all that skews is bias, and not all bias needs to be corrected. Of course, there is a very difficult step in identifying which kind of bias needs to be removed, and how to do so in a way that does not add errors to the model. But that is a totally different story.